
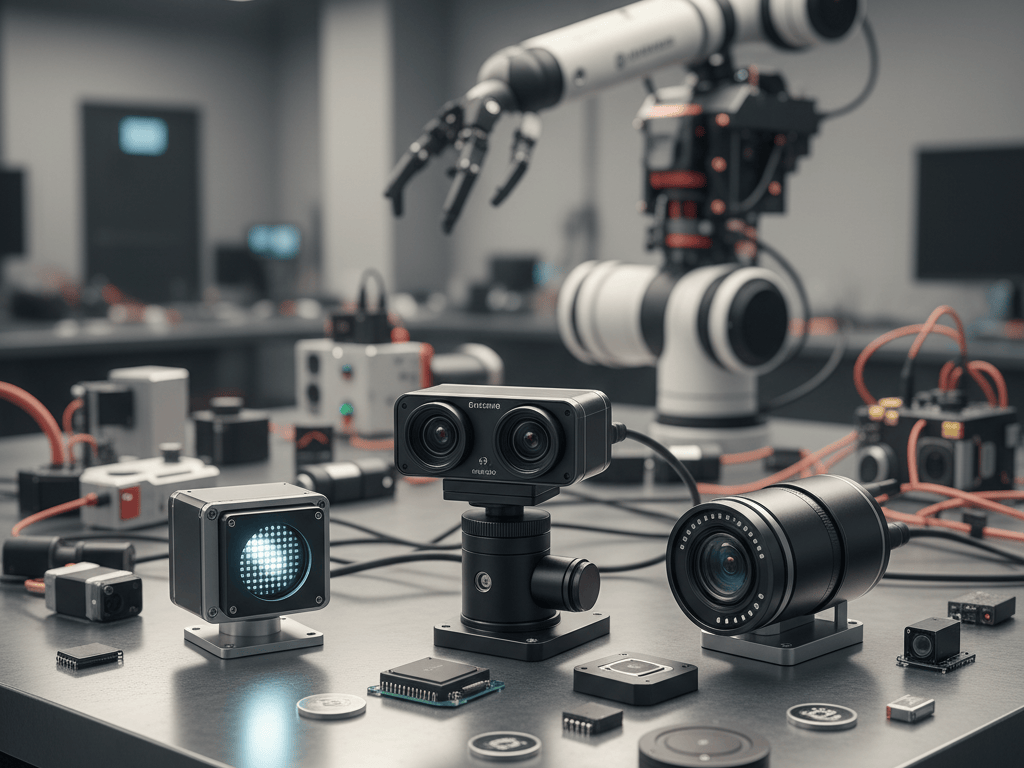

Robots are no longer the blind automatons of early industrial factories; vision sensors for robotics have given machines the ability to see, interpret and act upon the world around them. These sensors capture light or depth information and translate it into digital insights that control algorithms can understand, giving robots the “eyes” they need to navigate warehouses, pick products from cluttered bins and collaborate safely alongside humans.

The TechNexion report on camera evolution stresses that vision is essential for robotic autonomy because it supports obstacle avoidance and spatial awareness. At Yana Sourcing we believe a sourcing partner should do more than locate hardware on a price list; our high‑dimensional approach pairs clients with precisely the right vision sensors for robotics and technical know‑how, so every project benefits from sensors matched to its environment and goals.

The Role of Vision Sensors in Robotic Autonomy

Autonomous robots rely on perception to move intelligently, and vision sensors for robotics supply the raw data that becomes situational awareness. By converting photons into electrical signals, camera modules enable mapping, localization, object detection and fine‑grained manipulation. Research explains that vision is the foundation of obstacle avoidance and spatial understanding in robots, enabling agile locomotion and object interaction. Without vision sensors for robotics, a robot would stumble, misidentify targets or require elaborate external infrastructure such as beacons to navigate.

Whether deployed on rovers exploring disaster sites or cobots assisting in assembly lines, cameras and depth sensors empower machines to adapt to dynamic environments. Yana Sourcing’s high‑dimensional sourcing strategy ensures that the vision solution integrates seamlessly with other sensors (IMU, force‑torque, encoders) and computing platforms, maximizing autonomy and reliability.

Classes of Vision Sensors – Passive/Active, Digital/Analog, 2D/3D

To design a perception stack, engineers must understand the main categories of vision sensors for robotics. The Fiveable vision sensor guide distinguishes between passive sensors, which collect existing light, and active sensors that project a pattern or illumination. Passive examples include standard RGB cameras that rely on ambient lighting; active devices include structured light projectors and time‑of‑flight (ToF) sensors that emit modulated light and measure reflections.

Sensors can also be classified as digital or analog; analog cameras output a continuous voltage that must be digitized, while digital cameras integrate the analog‑to‑digital converter into the sensor so that pixel data is delivered as digital values. Another axis is 2D vs 3D. Two‑dimensional cameras provide only intensity information, whereas 3D sensors deliver depth by measuring disparity or time of flight. Yana Sourcing helps clients navigate this taxonomy, selecting vision sensors for robotics that match the required dimensionality, lighting conditions and integration complexity.

Camera Fundamentals: Image Formation, Optics and Sensor Technology

At the heart of vision sensors for robotics is the physics of image formation. A camera gathers light through a lens and focuses it onto a photodetector array, producing a two‑dimensional image. The Fiveable guide notes that pinhole and lens models illustrate how light rays converge to form images; lens apertures control the amount of light and depth of field. Lenses suffer from distortions (radial and tangential) that must be calibrated to achieve accurate perception.
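As a rough illustration of the pinhole model and the radial and tangential distortion terms mentioned above, the following Python sketch projects a 3D point in the camera frame onto the image plane. It assumes the widely used Brown-Conrady distortion model with two radial and two tangential coefficients. The `Intrinsics` class, the `project` function and all numeric values are hypothetical and do not describe any particular camera.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics plus Brown-Conrady distortion terms."""
    fx: float          # focal length in pixels along x
    fy: float          # focal length in pixels along y
    cx: float          # principal point x (pixels)
    cy: float          # principal point y (pixels)
    k1: float = 0.0    # radial distortion, 2nd order
    k2: float = 0.0    # radial distortion, 4th order
    p1: float = 0.0    # tangential distortion
    p2: float = 0.0    # tangential distortion


def project(point_xyz, cam):
    """Project a 3D point (camera frame, metres) to pixel coordinates (u, v)."""
    X, Y, Z = point_xyz
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    # Ideal pinhole projection onto the normalized image plane.
    x, y = X / Z, Y / Z
    r2 = x * x + y * y
    # Radial term scales the point toward or away from the image centre.
    radial = 1.0 + cam.k1 * r2 + cam.k2 * r2 * r2
    # Tangential terms model a lens that is not parallel to the sensor.
    x_d = x * radial + 2.0 * cam.p1 * x * y + cam.p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + cam.p1 * (r2 + 2.0 * y * y) + 2.0 * cam.p2 * x * y
    return cam.fx * x_d + cam.cx, cam.fy * y_d + cam.cy


ideal = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
barrel = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0, k1=-0.2)
print(project((0.1, 0.0, 1.0), ideal))
print(project((0.1, 0.0, 1.0), barrel))
```

With a negative `k1` (barrel distortion) the projected point lands slightly closer to the image centre than in the ideal case. Calibration estimates these coefficients so that software can undo the shift.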

Sensor size, pixel pitch and fill factor influence resolution and noise: larger sensors with bigger pixels capture more photons and produce higher dynamic range but may be bulkier. Camera chips are commonly based on CCD (charge‑coupled device) or CMOS (complementary metal‑oxide‑semiconductor) technology; CCD sensors historically offered lower noise but higher power and cost, while modern CMOS sensors deliver comparable performance at lower power and cost.

When Yana Sourcing evaluates vision sensors for robotics, we consider these optical and semiconductor parameters along with packaging, window materials and anti‑reflection coatings to ensure the chosen module delivers sharp images under real‑world conditions.

Monocular Cameras: The Baseline for Robot Vision

The simplest form of vision sensors for robotics is the monocular camera—a single lens capturing a sequence of 2D images. These cameras provide rich color and texture information, enabling object classification and pose estimation via computer vision algorithms. The TechNexion article notes that monocular cameras are widely used due to their compact size and low cost, but they cannot directly measure depth and must rely on techniques like structure‑from‑motion or machine learning for distance estimation.

Monocular sensors are ideal for tasks such as barcode scanning, visual servoing and surface inspection. To achieve full autonomy, robots often pair monocular cameras with other sensors like ultrasonic or infrared to gauge distance. Yana Sourcing recognizes that even these basic vision sensors for robotics vary widely in resolution (VGA to 8K), frame rate and dynamic range, and we match clients with sensors that strike the right balance between cost, field of view and optical quality.

Stereo Vision: Bi‑Cam Systems for Depth Perception

By placing two cameras at a known baseline, robots can triangulate the distance to objects; this technique is known as stereo vision. Passive stereo systems rely on ambient light and compute depth by matching pixels across left and right images, while active stereo uses an infrared projector to cast a dot pattern that improves texture in low‑contrast scenes. The TechNexion article explains that stereo vision is popular for robot navigation because it offers a good balance between cost and accuracy, but accuracy decreases with distance and performance suffers in featureless regions.

Stereo pairs must be carefully calibrated to estimate the camera extrinsics and rectify images. In robotics, stereo cameras are found in mobile platforms, drones and humanoid heads to generate real‑time point clouds for obstacle avoidance. When sourcing vision sensors for robotics that use stereo, Yana Sourcing evaluates baseline length, sensor synchronization and IP ratings to ensure reliable depth performance in the target environment.
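For a calibrated and rectified pair, the triangulation described above reduces to a simple relation between disparity and depth, which also shows why accuracy drops with distance. The sketch below is a minimal illustration; the function names, the focal length, the baseline and the assumed quarter-pixel matching error are all hypothetical values chosen for the example.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd.

    disparity_error_px is an assumed matching error, not a measured one.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical rig: 700 px focal length, 12 cm baseline.
f_px, b_m = 700.0, 0.12
print(f"depth at 42 px disparity: {depth_from_disparity(f_px, b_m, 42.0):.2f} m")
for z in (2.0, 4.0, 8.0):
    print(f"expected error at {z:.0f} m: {depth_error(f_px, b_m, z) * 100:.1f} cm")
```

Because the error grows with the square of the distance, quadrupling the range multiplies the depth uncertainty by sixteen. A longer baseline or a longer focal length reduces it, which is why baseline length matters when selecting a stereo module.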

Structured Light and RGB‑D Sensors: Projected Patterns for Dense Depth

Active 3D cameras often use structured light, projecting known patterns and measuring their distortion to compute depth. This principle is used by early RGB‑D sensors like the original Kinect and by many modern modules. Structured light sensors can produce dense and accurate depth maps at close range, and narrow‑band infrared filters make them fairly robust to indoor ambient light. However, they may struggle outdoors because sunlight can saturate the sensor and wash out the projected pattern.

The TechNexion article notes that RGB‑D sensors combine a color camera and a depth sensor in one package, providing synchronized depth and color frames for tasks like hand‑eye coordination and object manipulation. Yana Sourcing matches clients with structured light vision sensors for robotics when they need high‑resolution depth at short distances, such as bin‑picking or human pose estimation, and ensures that illumination safety and heat dissipation are addressed.

Time‑of‑Flight Cameras: Measuring Light Travel Time

Time‑of‑flight (ToF) cameras estimate distance by emitting modulated light and measuring phase shift or flight time of photons. TechNexion explains that ToF sensors deliver fast depth measurements with simple correspondence (no complex matching) and are less sensitive to texture. Tangram Vision notes that ToF sensors behave like LiDAR but in a solid‑state array; they offer high frame rates and are good for obstacle detection, though there is a resolution–range trade‑off because depth uncertainty grows with distance.
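The two measurement principles named above, flight time and phase shift, can be sketched in a few lines. This is a simplified model that ignores noise, multipath and calibration offsets; the function names and the 20 MHz modulation frequency are assumptions made for the example.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def pulse_to_distance(round_trip_s):
    """Direct ToF: light travels to the target and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def phase_to_distance(phase_rad, modulation_hz):
    """Indirect ToF: distance from the phase shift of modulated light."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Beyond this distance the phase wraps past 2*pi and aliases."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


f_mod = 20e6  # hypothetical 20 MHz modulation
print(f"10 ns round trip: {pulse_to_distance(10e-9):.3f} m")
print(f"quarter-turn phase: {phase_to_distance(math.pi / 2, f_mod):.3f} m")
print(f"unambiguous range: {unambiguous_range(f_mod):.3f} m")
```

A lower modulation frequency extends the unambiguous range but makes each radian of phase correspond to more distance, so the same phase noise produces a larger depth error. This is one source of the range trade-off mentioned above.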

ToF cameras are widely used in mobile platforms, agricultural robots, logistics and gaming devices for gesture control. Many ToF modules provide integrated ambient light rejection and depth scaling algorithms. Yana Sourcing works with manufacturers that deliver ToF vision sensors for robotics with consistent calibration, open SDKs and custom lens options, enabling clients to embed them in tight spaces or unusual orientations.

LiDAR Sensors: Long‑Range 3D Scanning

Light detection and ranging (LiDAR) sensors are an essential class of vision sensors for robotics that provide long‑range depth information by scanning a laser across the scene. LiDAR units can use mechanical spinning mirrors or solid‑state phased arrays to emit laser pulses and measure return time.
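Each laser return is a range measured along a known beam direction, so a scan becomes a 3D point cloud through a polar-to-Cartesian conversion. The sketch below assumes a frame with x forward, y left and z up, with azimuth measured counter-clockwise from the x axis. Real units define their own conventions, so the function names and the axis layout here are assumptions.

```python
import math


def polar_to_cartesian(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return to (x, y, z) in the assumed sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


def scan_to_points(returns):
    """Convert (range, azimuth, elevation) tuples, skipping empty returns.

    A range of zero is treated here as 'no echo received' (an assumption).
    """
    return [polar_to_cartesian(r, az, el) for r, az, el in returns if r > 0]


scan = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (0.0, 180.0, 0.0), (5.0, 0.0, 15.0)]
for x, y, z in scan_to_points(scan):
    print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```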

The TechNexion report notes that LiDAR offers extremely precise depth data and is used for mapping large spaces, navigation and obstacle detection for autonomous vehicles, drones and warehouse robots. However, LiDAR is relatively bulky and expensive compared to cameras and ToF arrays, and some units produce sparse point clouds. Sourcing LiDAR involves balancing range, resolution, scan rate and safety class of the laser. Yana Sourcing works with leading LiDAR makers to deliver vision sensors for robotics that meet automotive reliability standards while being cost‑effective for industrial uses.

Event Cameras: Neuromorphic Vision for High‑Speed Tasks

Traditional frame‑based cameras capture complete images at fixed intervals, but event cameras operate asynchronously, outputting pixel‑level brightness changes as “events.” The University of Zurich’s Dynamic Vision Sensor (DVS) page describes how these bio‑inspired devices have microsecond latency, extremely high dynamic range and produce data only when something changes. Vision Systems Design notes that event sensors can generate the equivalent of 10,000 frames per second and reduce data volume by focusing on motion rather than static backgrounds.
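To make the idea of "data only when something changes" concrete, the sketch below applies a commonly used idealized event model to a single pixel: an event fires each time the log intensity moves by more than a contrast threshold from the level at the last event. The function name, the threshold of 0.2 and the sample values are hypothetical, and real sensors add noise and refractory effects that are not modelled here.

```python
import math


def events_for_pixel(samples, threshold=0.2):
    """Generate (timestamp, polarity) events for one pixel.

    samples: list of (timestamp_s, intensity) with intensity > 0.
    polarity is +1 for brightening and -1 for darkening.
    """
    events = []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)  # log level at the last event
    for timestamp, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        # A large change can cross the threshold several times.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((timestamp, polarity))
            reference += polarity * threshold
            delta = math.log(intensity) - reference
    return events


static_pixel = [(0.000, 100.0), (0.001, 100.0), (0.002, 100.0)]
moving_edge = [(0.000, 100.0), (0.001, 150.0), (0.002, 60.0)]
print(events_for_pixel(static_pixel))
print(events_for_pixel(moving_edge))
```

The static pixel produces no output at all, while the pixel crossed by an edge emits a short burst of positive and then negative events. This is the behaviour that keeps data volume low for mostly static scenes.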

These features make event cameras ideal for high‑speed tracking, manipulation and robotics tasks where latency and motion blur are critical, such as drone stabilization or catching a thrown object. Because event data is asynchronous, algorithms and hardware must be designed to process events rather than images. Yana Sourcing collaborates with research‑oriented clients to source event‑based vision sensors for robotics along with software toolchains and developer support, enabling cutting‑edge applications that go beyond frame‑based paradigms.

Thermal and Infrared Cameras: Seeing Heat and Light Beyond the Visible

Another category of vision sensors for robotics uses infrared radiation rather than visible light. Thermal cameras detect long‑wave infrared emitted by objects, enabling robots to see in complete darkness or through fog and smoke. The University of Michigan’s FCAV notes that thermal cameras eliminate shadows because they depend on emitted radiation and can image through obscurants, making them useful in search‑and‑rescue or nighttime surveillance.

However, microbolometer sensors have slower response times and can suffer motion blur when mounted on fast‑moving robots. Near‑infrared and short‑wave infrared cameras can see features invisible to human eyes, such as moisture content in plants or identification marks on packaging. Yana Sourcing helps clients evaluate whether thermal or NIR/SWIR vision sensors for robotics will improve performance for tasks like crop monitoring or quality inspection, and ensures that appropriate lenses, filters and calibrations are included.

Emerging Vision Technologies: Polarization, Hyperspectral and AI‑Powered Cameras

The world of vision sensors for robotics continues to evolve. Emerging technologies such as polarization cameras, hyperspectral imaging and AI‑powered vision modules are pushing the boundaries of what robots can perceive. The TechNexion article highlights polarization cameras, which measure the orientation of light waves to determine surface properties like reflection and texture; these sensors can detect ice on runways or differentiate between matte and glossy materials.

Hyperspectral cameras capture dozens or hundreds of spectral bands, enabling robots to identify material composition, detect contaminants or classify crops based on chemical signatures. AI‑powered vision modules integrate deep neural networks directly onto the camera, delivering high‑level perception tasks such as object detection, segmentation and pose estimation on the edge. Quantum imaging and spin‑injection devices are early research technologies that may one day offer ultra‑low‑noise imaging. Yana Sourcing stays at the forefront of these innovations, ensuring our clients have access to novel vision sensors for robotics that give their robots capabilities beyond what standard cameras can achieve.

Technical Performance Parameters

Selecting the right vision sensors for robotics requires evaluating key performance metrics. Resolution determines how much detail a sensor captures; higher resolution improves object detection but increases processing load and may require more bandwidth. Frame rate indicates how quickly a sensor updates; robots performing high‑speed tasks need high frame rates to track fast motion, along with short exposure times to limit motion blur.

Dynamic range measures a sensor’s ability to capture both bright and dark regions; outdoor robots dealing with sunlight and shadows require sensors with high dynamic range. For 3D sensors, depth accuracy and point density define how precisely distance is measured. Other important parameters include latency, exposure control, noise, linearity, temperature stability and interface (USB, GigE, MIPI CSI‑2, LVDS). Yana Sourcing conducts deep technical analysis on these metrics for every project involving vision sensors for robotics to ensure that the camera’s capabilities align with system requirements and the software stack.
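Two of these metrics lend themselves to quick back-of-the-envelope checks: the raw data rate implied by resolution and frame rate, and dynamic range expressed in decibels. The sketch below is only an estimate under stated assumptions. It ignores compression and protocol overhead, and the full-well and read-noise figures are hypothetical rather than taken from a datasheet.

```python
import math


def raw_data_rate_bps(width_px, height_px, frames_per_s, bits_per_pixel):
    """Uncompressed video data rate in bits per second (no protocol overhead)."""
    return width_px * height_px * frames_per_s * bits_per_pixel


def dynamic_range_db(full_well_e, read_noise_e):
    """Sensor dynamic range as 20 * log10(largest signal / noise floor)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


# 1080p monochrome at 60 fps, 8 bits per pixel.
rate = raw_data_rate_bps(1920, 1080, 60, 8)
print(f"raw data rate: {rate / 1e9:.3f} Gbit/s")

# Hypothetical pixel: 10,000 e- full well, 2 e- read noise.
print(f"dynamic range: {dynamic_range_db(10_000, 2):.1f} dB")
```

The first figure comes out just under 1 Gbit/s, so an uncompressed 1080p60 stream would leave essentially no headroom on a 1 Gbit/s link. This kind of estimate helps narrow down the interface choice before comparing specific modules.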

Sensor Fusion and System Integration

No single modality is sufficient for robust autonomy; vision sensors for robotics are most effective when fused with inertial sensors, encoders and force sensors. Sensor fusion algorithms combine data from cameras, IMUs and other sensors to produce accurate estimates of position and orientation. Depth cameras provide spatial maps, while IMUs fill in motion between frames. For example, stereo or RGB‑D cameras may be combined with wheel encoders in an occupancy grid to localize a mobile robot.

Fusing event cameras with gyroscopes can deliver high‑speed orientation tracking for micro‑drones. The integration of these sensors requires careful consideration of frame synchronization, extrinsic calibration and time stamping. Yana Sourcing’s high‑dimensional service ensures that the vision sensors for robotics selected will integrate seamlessly with existing platforms, from ROS 2 drivers to proprietary embedded systems, and we work with clients to implement calibration routines and sensor fusion algorithms.
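Time stamping matters because fusion only works when measurements that describe the same instant are paired. As a minimal sketch, the function below matches each camera frame to the nearest IMU sample and discards frames with no sample inside a tolerance. The function name, the 5 ms tolerance and the sample timestamps are hypothetical, and both streams are assumed to share one clock.

```python
from bisect import bisect_left


def match_nearest(frame_stamps, imu_stamps, max_offset_s=0.005):
    """Pair each frame with the closest IMU sample in time.

    imu_stamps must be sorted ascending. Returns (frame_index, imu_index)
    pairs; frames with no IMU sample within max_offset_s are dropped.
    """
    pairs = []
    for frame_index, stamp in enumerate(frame_stamps):
        pos = bisect_left(imu_stamps, stamp)
        # The nearest sample is either just before or just after the frame.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(imu_stamps)]
        if not candidates:
            continue
        best = min(candidates, key=lambda i: abs(imu_stamps[i] - stamp))
        if abs(imu_stamps[best] - stamp) <= max_offset_s:
            pairs.append((frame_index, best))
    return pairs


imu = [0.000, 0.005, 0.010, 0.015, 0.020]   # 200 Hz IMU
frames = [0.0051, 0.0182, 0.100]            # last frame has no nearby sample
print(match_nearest(frames, imu))
```

Nearest-sample matching is the simplest option. Interpolating the IMU readings to the exact frame time, or using hardware triggering, are common refinements when tighter synchronization is needed.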

Manufacturing and Sourcing Insights

In a crowded market of modules and OEM boards, sourcing vision sensors for robotics demands expertise in both component technology and supply chain management. Manufacturers of camera modules must maintain clean‑room conditions for lens and sensor assembly, ensure proper alignment and apply anti‑reflective coatings. They must calibrate color balance, lens distortion and temperature drift.

Active sensors require eye‑safe illumination and robust packaging to handle heat dissipation. Yana Sourcing maintains relationships with global suppliers, from established camera companies such as Basler, IDS Imaging, FLIR and Cognex to specialized depth‑sensor makers like Intel (RealSense), Orbbec, Photoneo, Zivid and Microsoft (Azure Kinect).

We also monitor Chinese OEMs that offer cost‑competitive modules with decent performance. When clients seek vision sensors for robotics through Yana, we evaluate manufacturer certifications (ISO 9001 and IATF 16949, the successor to ISO/TS 16949), production capacity, minimum order quantities, and sample lead times. Our high‑dimensional sourcing includes performance validation, factory audits and supply chain risk assessment, ensuring that customers receive quality sensors with long‑term support.

Major Manufacturers and Categories

The market for vision sensors for robotics includes numerous players across various categories. Image sensor vendors like Sony, OmniVision and onsemi design the chips used by many camera module makers. Industrial camera manufacturers such as Basler and IDS provide robust GigE and USB cameras with long‑term availability. Depth sensor providers include Intel’s RealSense line (D400 series and L515), Orbbec (Astra and Gemini), Photoneo (PhoXi 3D), Zivid (Zivid 2) and Microsoft (Azure Kinect); these modules produce depth, usually alongside color, using active stereo, structured light or ToF.

LiDAR manufacturers range from Velodyne and Hesai to automotive suppliers like Valeo. For event cameras, companies such as Prophesee and iniVation lead the field with neuromorphic sensors. Thermal camera vendors include Teledyne FLIR, Seek Thermal and Hikvision. By partnering with Yana Sourcing, clients gain access to this wide ecosystem of vision sensors for robotics and receive guidance on trade‑offs among cost, performance, reliability and ecosystem support.

Applications Across Industries

The deployment of vision sensors for robotics spans numerous sectors. In logistics, fixed and mobile robots rely on cameras and depth sensors to navigate warehouses, scan barcodes, and pick and place goods. In manufacturing, vision systems inspect products for defects, guide robot arms for assembly and monitor quality. In agriculture, cameras and multispectral sensors estimate crop health and steer autonomous tractors. Healthcare robots use depth cameras to detect human posture and environment for physical therapy or surgical assistance.

Autonomous vehicles integrate LiDAR, cameras and radar to perceive roads, traffic signals and pedestrians. Service robots in hospitality and retail use vision sensors to recognize guests and maintain inventory. Each application demands different characteristics: high resolution for inspection, low latency for navigation, or extended dynamic range for outdoor operation. Yana Sourcing’s high‑dimensional knowledge ensures that vision sensors for robotics are tailored to the specific requirements of each industry and use case.

Design Trends and Innovations

Innovation in vision sensors for robotics is rapid. Microbolometer thermal cameras are becoming smaller and more affordable, enabling integration into consumer devices and drones. Solid‑state LiDAR is replacing mechanical scanning to improve reliability and reduce cost, while hybrid systems combine LiDAR with camera arrays for better scene understanding. Event‑based vision is moving from research to production in high‑speed vision tasks.

AI at the edge is transforming cameras into smart sensors that output detections and segmentation maps rather than raw images. The TechNexion article highlights that new modalities such as polarization and hyperspectral imaging are gaining popularity. Advances in photonic sensors and quantum technologies promise improved sensitivity and lower noise. Yana Sourcing remains at the forefront of these trends, regularly evaluating prototypes and working with early adopters to integrate advanced vision sensors for robotics into commercial products.

Comparative Selection Guide

Choosing among different vision sensors for robotics involves balancing many factors. Monocular cameras are inexpensive and offer high resolutions, but lack depth information. Stereo vision provides dense depth maps but requires careful calibration and loses accuracy at long range. Structured light cameras deliver precise depth at close range yet are susceptible to sunlight interference. ToF sensors are easy to integrate but may trade resolution for range. LiDAR excels in long‑range scanning but can be cost‑prohibitive for small robots.

Event cameras provide unmatched latency and dynamic range but require specialized algorithms. Thermal and infrared cameras enable perception in darkness and through smoke but lack the crisp detail of visible imaging. Emerging technologies like hyperspectral and polarization sensors offer unique advantages but may still be expensive or require domain‑specific processing. Yana Sourcing helps clients weigh these factors, recommending the best vision sensors for robotics based on budget, environment, integration complexity and performance targets.

Practical Sourcing Tips

When sourcing vision sensors for robotics, due diligence is critical. First, define the operating environment: indoors versus outdoors, lighting conditions, temperature range and environmental stresses (dust, moisture, vibration). Choose sensors with appropriate IP ratings and housing materials for harsh conditions. Verify that the sensor supports the required interface (USB 3.0, GigE Vision, MIPI CSI) and that driver software exists for your development platform. Request calibration data and test frames to evaluate optical quality, noise and depth accuracy.

For active sensors, review the eye safety classification (for example, Class 1 for lasers) and ensure thermal management is adequate. Compare lead times, minimum order quantities and warranty policies across suppliers. When evaluating Chinese OEMs offering cost‑effective modules, confirm quality control procedures and long‑term availability. Yana Sourcing’s high‑dimensional services go beyond price negotiations: we assist with specification definition, supplier vetting, sample evaluation and integration support, ensuring that vision sensors for robotics deliver reliable performance and minimize supply chain risk.

Conclusion: Empowering Robots with Vision and Sourcing Excellence

Vision sensors for robotics are the heart of perception, giving machines the ability to see, understand and interact with the world. They enable everything from simple barcode scanning to complex autonomous navigation. A wide variety of sensing modalities (monocular cameras, stereo vision, structured light, time‑of‑flight, LiDAR, event‑based sensors, thermal cameras and emerging technologies) gives engineers the tools to build robots that operate safely and efficiently.

Understanding the physics, capabilities and limitations of each sensor type is crucial for choosing the right solution. Yana Sourcing’s high‑dimensional approach ensures that clients receive not just hardware but strategic guidance on sensor fusion, integration and supply chain. Our in‑depth knowledge of manufacturers, technologies and market trends allows us to deliver vision sensors for robotics that are tailored to each application, from industrial automation to healthcare and agriculture. If you are ready to elevate your robots’ perception, contact Yana Sourcing. Our experts will help you navigate the complex landscape of vision sensor technologies, evaluate suppliers and integrate robust, future‑ready solutions that deliver exceptional performance.